Research Interests

We advance trustworthy and privacy-preserving systems through research in AI, secure infrastructures, and decentralized computing for reliable and ethical real-world impact.

Research Projects

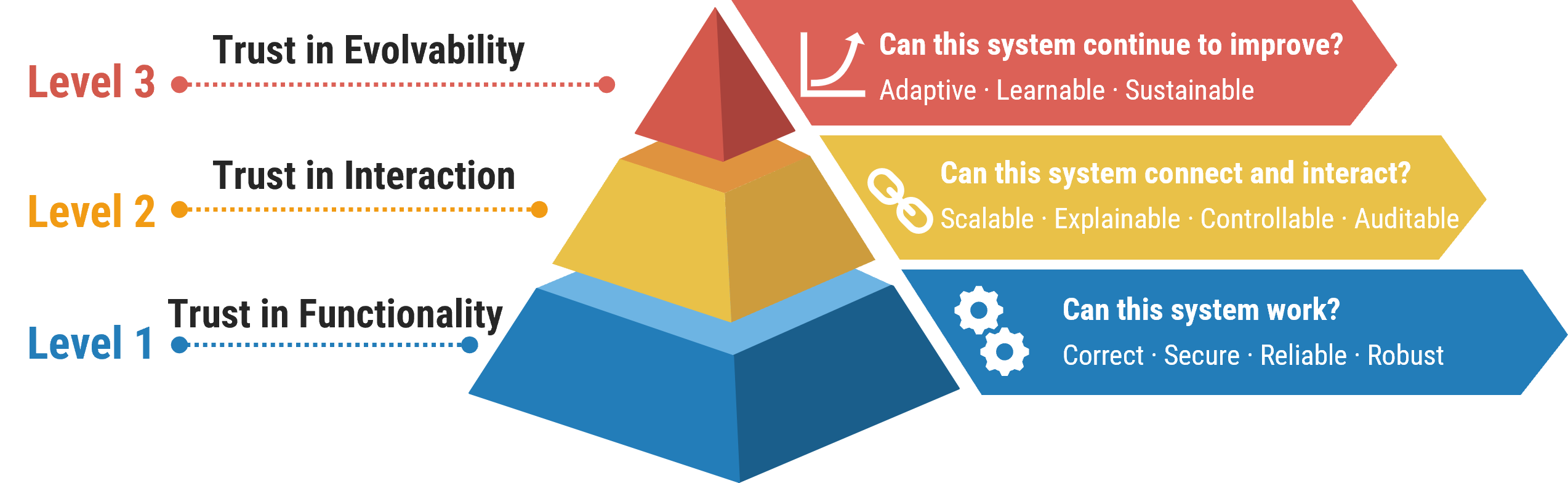

3-layer trust building

Trustworthy Systems

This project builds robust and explainable infrastructures that align technical reliability with human values — integrating distributed systems, blockchains, AI, and networking to create transparent and ethical platforms.

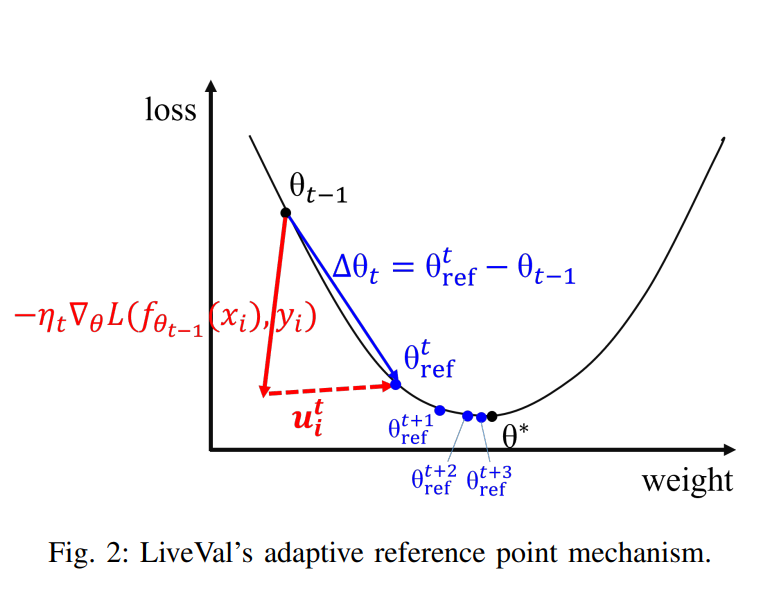

LiveVal: Time-aware Data Valuation via Adaptive Reference Points

AI Safety

LiveVal is an efficient time-aware data valuation method built on three key designs: 1) seamless integration with SGD training for efficient monitoring of data contributions; 2) reference-based valuation with normalization to establish a reliable benchmark; and 3) adaptive reference point selection for real-time updates with optimized memory usage.

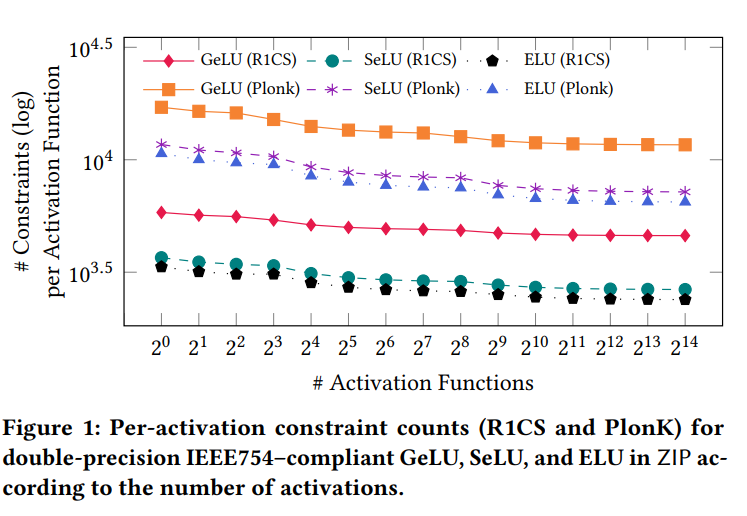

Zero-Knowledge AI Inference with High Precision

Trustworthy Systems

ZIP is an efficient and precise commit-and-prove zero-knowledge SNARK for AI-as-a-Service (AIaaS) inference, covering both linear and non-linear layers. It natively supports IEEE-754 double-precision floating-point semantics while addressing the reliability and privacy challenges inherent in AIaaS.

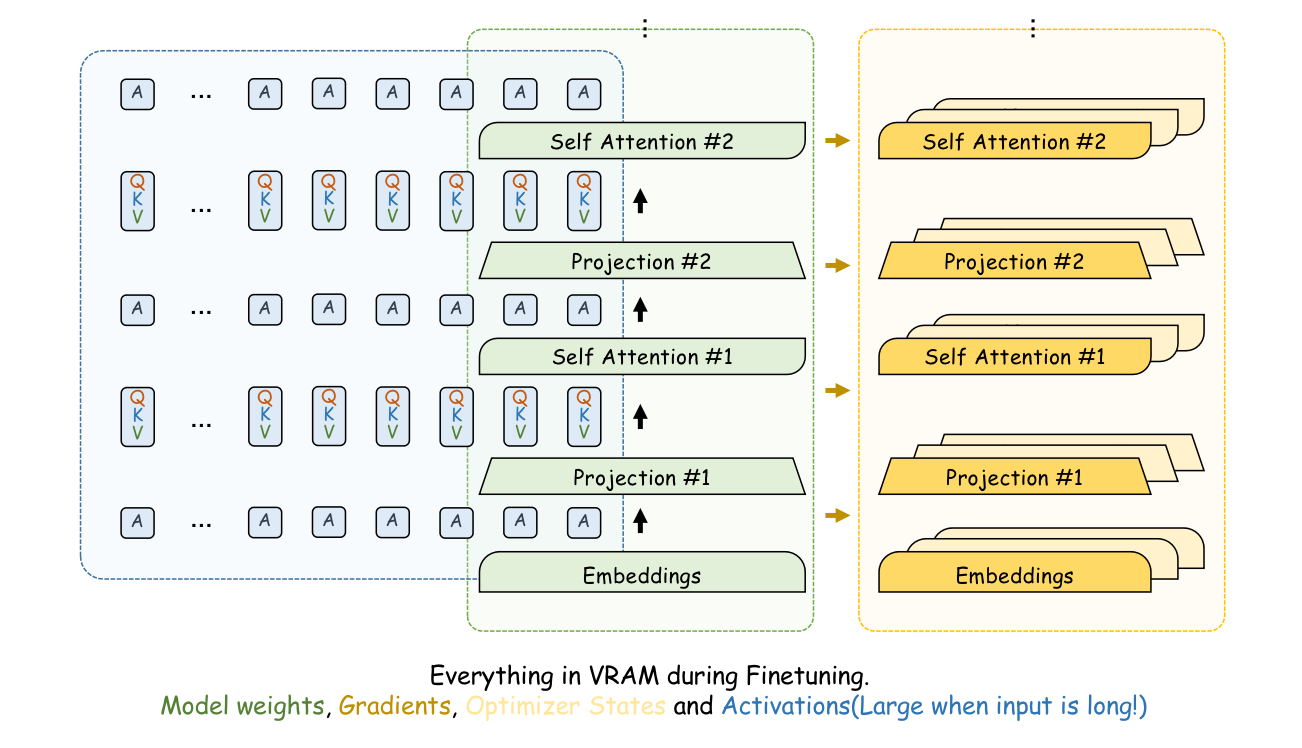

Long Context Fine-tuning

AI Safety

This project explains how to fine-tune large language models for long-text inputs beyond 32K tokens. It discusses the main challenges — high memory usage, inefficient batching, and quadratic attention cost — and introduces techniques like GQA, gradient checkpointing, LoRA, sample packing, and Flash Attention to address them. The author demonstrates these methods with the “Faro” model series, showing clear performance gains on long-context benchmarks.
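
Of the techniques listed above, sample packing is the easiest to illustrate in isolation. The following is a minimal sketch, not the project's implementation: it assumes a greedy first-fit-decreasing strategy, and the names `pack_samples`, `padding_fraction`, and the example token counts are hypothetical. It shows how grouping variable-length samples into fixed-size sequences reduces wasted padding.

```python
from typing import List


def pack_samples(lengths: List[int], max_len: int) -> List[List[int]]:
    """Greedy first-fit-decreasing packing (an assumed strategy).

    Returns a list of bins; each bin holds the indices of the samples
    that share one packed sequence of at most max_len tokens.
    """
    # Visit samples from longest to shortest.
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    bins: List[List[int]] = []
    free: List[int] = []  # remaining token budget of each bin

    for i in order:
        n = lengths[i]
        if n > max_len:
            raise ValueError(f"sample {i} has {n} tokens, above max_len={max_len}")
        for b, room in enumerate(free):
            if n <= room:  # first bin with enough room
                bins[b].append(i)
                free[b] -= n
                break
        else:  # no existing bin fits: open a new one
            bins.append([i])
            free.append(max_len - n)
    return bins


def padding_fraction(lengths: List[int], num_sequences: int, max_len: int) -> float:
    """Fraction of token slots that would be padding."""
    return 1.0 - sum(lengths) / (num_sequences * max_len)


# Hypothetical token counts for six training samples.
lengths = [30000, 2000, 12000, 18000, 1000, 500]
max_len = 32768

bins = pack_samples(lengths, max_len)
unpacked = padding_fraction(lengths, len(lengths), max_len)
packed = padding_fraction(lengths, len(bins), max_len)
print(bins)
print(f"padding without packing: {unpacked:.1%}, with packing: {packed:.1%}")
```

In a real training pipeline, packed samples typically also need attention masks or position resets so that tokens do not attend across sample boundaries; that part is omitted here.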

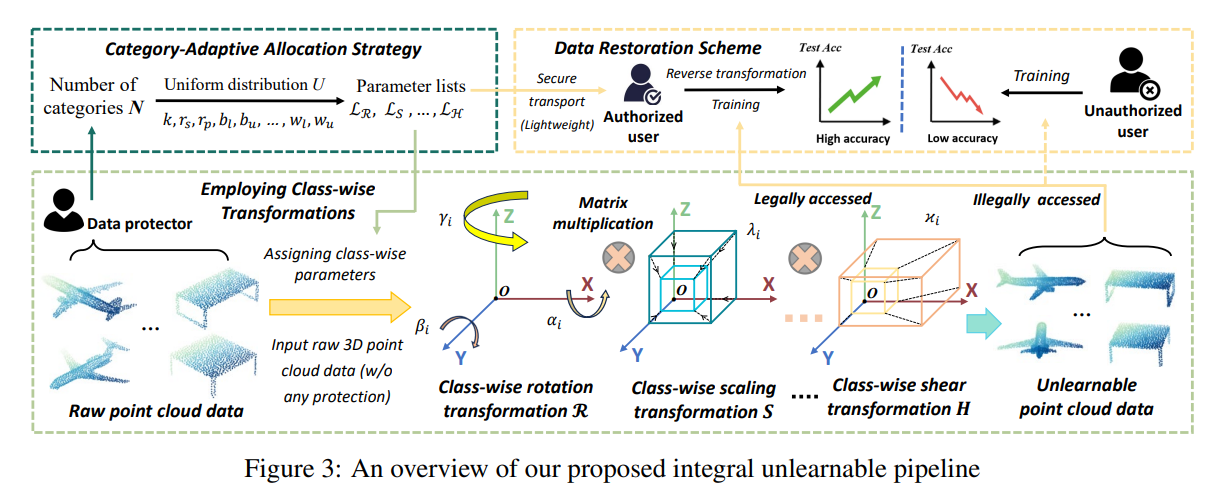

Unlearnable 3D Point Clouds: Class-wise Transformation Is All You Need

AI Safety

We propose the first integral unlearnable framework for 3D point clouds, comprising two processes: (i) an unlearnable data protection scheme, which uses a class-wise setting established by a category-adaptive allocation strategy and multiple transformations assigned to samples; and (ii) a data restoration scheme that applies class-wise inverse matrix transformations, enabling authorized-only training on unlearnable data.
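
The protect-then-restore idea can be sketched with plain 3x3 matrices. This is an illustrative toy under stated assumptions, not the framework itself: the per-class rotation angle and scale in `class_transform` are invented for the example, and the category-adaptive allocation strategy is not reproduced. It only shows that a class-wise invertible transformation can be undone by whoever holds the matching inverse matrix.

```python
import math
from typing import List, Tuple

Point = Tuple[float, ...]
Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_z(theta: float) -> Matrix:
    """Rotation about the z-axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def scaling(factor: float) -> Matrix:
    """Uniform scaling by factor."""
    return [[factor, 0.0, 0.0], [0.0, factor, 0.0], [0.0, 0.0, factor]]


def class_transform(label: int, num_classes: int) -> Tuple[Matrix, Matrix]:
    """Return (forward, inverse) matrices for one class.

    The angle and scale schedule below is a hypothetical choice made
    for this sketch; every class simply gets a distinct transformation.
    """
    theta = 2.0 * math.pi * (label + 1) / (num_classes + 1)
    factor = 1.0 + 0.1 * (label + 1)
    forward = matmul(rotation_z(theta), scaling(factor))
    # Inverse of (R * S) is S^-1 * R^-1.
    inverse = matmul(scaling(1.0 / factor), rotation_z(-theta))
    return forward, inverse


def apply(m: Matrix, cloud: List[Point]) -> List[Point]:
    """Apply a 3x3 matrix to every point of a cloud."""
    return [tuple(sum(m[i][k] * p[k] for k in range(3)) for i in range(3))
            for p in cloud]


# A tiny hypothetical point cloud belonging to class 2 of 10.
cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.5), (-1.0, 1.0, 3.0)]
forward, inverse = class_transform(label=2, num_classes=10)

protected = apply(forward, cloud)     # what would be released publicly
restored = apply(inverse, protected)  # what an authorized user recovers
```

Up to floating-point error, `restored` matches `cloud`, while `protected` differs from it.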